Reviewed by James Harley (Sunland, California, USA)

Space. The coming of the new millennium must have brought with it some synchronous concerns, for the theme of space in music and audio has been the topic, in one form or another, of various gatherings. The annual meeting of the Society for Electro-Acoustic Music in the United States (SEAMUS) in March focused on spatial diffusion of electroacoustic music. The following weekend, a number of musicians and researchers met in Santa Barbara to explore "Sound in Space." The Institut de Recherche et Coordination Acoustique/Musique (IRCAM) also chose "space in music" as a special topic of its annual Summer Forum, held in June in Paris. And, of course, at the various Audio Engineering Society gatherings and related events, surround sound has become a hot issue, with new hardware and software being released at an increasingly urgent pace.

The Sound in Space 2000 Symposium was conceived by Curtis Roads and organized through the auspices of the Center for Research in Electronic Art Technology (CREATE) of the University of California, Santa Barbara (UCSB) with the help of JoAnn Kuchera-Morin, Stephen Travis Pope, and a number of graduate assistants. It took place over two days on the idyllic, lagoon-side UCSB campus. There were no formal concerts scheduled, but spatialized sound was very much in evidence, with an eight-channel "Creatophone" sound system installed in the symposium hall for the weekend. There were numerous demonstrations, entailing an at times overwhelmingly complex configuration of computers, instruments, sound cards, projectors, mixers, and playback equipment. To the organizers’ credit, everything ended up functioning as it should, albeit with occasional delays (though no one seemed to mind the additional brief opportunities to soak up some California sunshine).

When presenting research on sound in space, the sound system is obviously of crucial importance. The Creatophone is a spatial sound projection system of flexible configuration. For this symposium, the various inputs (ADAT, DAT, CD, computer with 8-channel output) were fed into a 16-bus Soundcraft mixer and then out through four Threshold stereo power amplifiers via Horizon, AudioQuest, and Tara interconnects and MIT and AudioQuest speaker cables to eight B&W Matrix 801 loudspeakers. The room, a rectangular lecture/recital hall that is wider than it is deep, has tiered seating curved in a semicircle around the small stage area. The loudspeakers were placed in a standard octagonal configuration, with two in front, two in the rear, and two on each side. The best listening was found in the center, as one would expect, but many spatialized effects could be heard quite effectively from other locations as well. The superior clarity and power of the Creatophone sound system certainly enhanced the listening experience, regardless of seating.

The presentations at the symposium (abstracts can be found at www.create.ucsb.edu/news/space.html) can be grouped into four categories: historical/analytical, conceptual/compositional, technical, and demonstrations. Because much of the work presented straddled more than one of these categories, the papers were not grouped into "sessions"; instead, the schedule was designed so that a theoretical discussion would be followed by a demonstration, which would in turn be followed by engineering research, and so on. It was, altogether, a lively symposium, with discussions spilling out into the gardens and terraces during breaks.

Curtis Roads (CREATE) opened the symposium with a historical review of sound spatialization for music, covering both physical and virtual systems. His presentation set the context for the weekend, along with the complementary paper by Stephen Travis Pope (CREATE), "The State of the Art in Sound Spatialization," which introduced a number of the field's concepts and issues. Frank Ekeberg (City University, London) laid out a framework for describing and understanding space as a structural element in music. His model, based in part on the spectromorphological approach to analysis developed by Denis Smalley (also at City University), defines sound objects in terms of "intrinsic," "extrinsic," and "spectral" space. Maria Anna Harley (University of Southern California) gave a lively discussion on the "signification of spatial sound imagery," taking the circle/sphere and net/web as paradigms of spatial design in contemporary music. She drew on a number of interesting, and occasionally amusing, examples from both electroacoustic and acoustic music. Frank Pecquet (Université de Paris I) argued for an aesthetic conception of space that is inherent to music, based on the simple but oft-overlooked observation that sound propagates through air (or another medium), and thus through space. He also played examples of his own music, which tries to combine the spatio-timbral properties of instruments with amplification and electroacoustic sonorities. Finally, David Malham (University of York) balanced Mr. Roads' historical opening remarks with a discussion titled "Future Possibilities for the Spatialization of Sound." In particular, he talked about the Hyper-Dense Transducer Array as a potentially "ultimate" sound spatialization system (see Figure 1).

Figure 1. Dave Malham, Sound in Space 2000 Symposium

While these presentations set the wider stage for the symposium, a number of others focused on more specific compositional concerns. Gerard Pape (Centre de Création Musicale "Iannis Xenakis") gave the first of these, discussing the relations between form, space, and time in musical composition. A particular concern was the time-varying and topologically varying possibilities for spatial composition. Pedro Rebelo (University of Edinburgh) proposed a novel approach to composition in which the harmonic characteristics of the music are derived from the resonant properties of the performance space itself or of a modeled instrument. Larry Austin (Denton, Texas) discussed his new realization of John Cage's Williams Mix. This octophonic work from 1953 was originally performed from eight monaural tapes, each painstakingly assembled from precisely measured fragments of tape containing pre-recorded sounds drawn from various categories of sound objects. James Harley (Moorhead State University) discussed his compositional explorations of spatial heterophony, in which similar streams of sound are presented from spatially separated loudspeakers. The illusion of motion arises from the perceptual interaction between these streams.

The technical presentations focused on a number of important issues in the field of sonic spatialization. Mr. Malham introduced an updated version of the Ambisonic technology for diffusing electroacoustic music. The system carries the soundfield in four audio channels, which are decoded to drive arrays of loudspeakers; previously, this encoding had been based entirely on first-order spherical harmonic descriptions of the soundfield. In his more recent work, he has implemented encoding based on higher-order harmonics, enabling greater control over distance and image clarity. Corey Cheng (University of Michigan) outlined his team's research into an interpolation algorithm for Head-Related Transfer Functions (HRTFs). This work, which builds on actual HRTF measurements, has led to a Graphical User Interface (GUI) that has been used to compose electroacoustic music taking particular advantage of the spatial cues provided by the HRTF data and the interpolation algorithms. Adopting quite a different approach, Fernando and Jose Ramon Beltran (University of Zaragoza) have developed a virtual space reverberation emulator using a multi-loudspeaker configuration. Impulse response measurements are assigned to each loudspeaker, and these data are used to generate the output signals for each of the four channels. Yon Vissel (University of Texas, Austin) has been working on applying noncommutative geometry to audio representations of quantum spaces. The aim is to develop a counterpart to the Euclidean models of objects derived from classical differential geometry. He went on to demonstrate how sounds may be generated through quantum modifications of standard signal processing operations.
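To make the first-order encoding mentioned above a little more concrete, here is a minimal Python sketch of the textbook first-order (B-format) encoding equations. It assumes the traditional Furse-Malham convention, in which the omnidirectional W channel is scaled by 1/√2, with azimuth measured counterclockwise from straight ahead and elevation measured upward from the horizontal. The function names are hypothetical, and this is not Mr. Malham's updated higher-order implementation; it only shows how a mono source at a given direction is distributed across the four channels W, X, Y, and Z.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one mono sample as traditional first-order B-format (W, X, Y, Z).

    Assumes Furse-Malham weighting (W scaled by 1/sqrt(2)), azimuth measured
    counterclockwise from straight ahead, elevation measured upward.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)                # omnidirectional (pressure) component
    x = sample * math.cos(az) * math.cos(el)   # front/back figure-of-eight
    y = sample * math.sin(az) * math.cos(el)   # left/right figure-of-eight
    z = sample * math.sin(el)                  # up/down figure-of-eight
    return w, x, y, z


def encode_signal(samples, azimuth_deg, elevation_deg=0.0):
    """Encode a list of mono samples into four B-format channel lists."""
    w_ch, x_ch, y_ch, z_ch = [], [], [], []
    for s in samples:
        w, x, y, z = encode_first_order(s, azimuth_deg, elevation_deg)
        w_ch.append(w)
        x_ch.append(x)
        y_ch.append(y)
        z_ch.append(z)
    return w_ch, x_ch, y_ch, z_ch


if __name__ == "__main__":
    # A unit impulse placed 90 degrees to the left on the horizontal plane:
    # the directional energy lands almost entirely in Y, with none in Z.
    print(encode_first_order(1.0, 90.0))
```

Decoding these four channels to a particular loudspeaker layout, and the additional channels that higher-order encoding requires, are deliberately left out of this sketch.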

While some of the technical discussions involved elaborate theoretical arguments using mathematics more familiar to engineers or physicists, the demonstrations provided welcome opportunities to listen to spatialized sound in action. Peter Otto (University of California, San Diego) presented his team's work in developing a real-time spatialization processor and interface using Max/MSP. Based on a physical model for distance simulation, the interface allows for effective adjustment of the relevant parameters for multi-channel placement and movement of sound. The demonstration included excerpts of Watershed, by UCSD senior composer Roger Reynolds, a work that has been released on DVD with 5.1 surround-sound audio. Mr. Roads and Alberto de Campo, both of CREATE, unveiled the Creatovox Synthesizer, a performance instrument based on granular synthesis. The numerous parameters for creating and manipulating the sounds are controllable through a variety of interfaces, including MIDI keyboard, joystick, sliders, foot pedals, and computer keyboard and mouse. Mr. de Campo later demonstrated the programming, using SuperCollider, that enables each grain of sound to be individually spatialized.

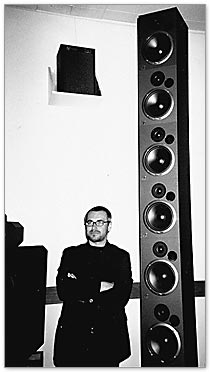

Figure 2. Gerhard Eckel, Sound in Space 2000 Symposium

Gerhard Eckel (German National Research Center for Information Technology) has done a great deal of work on sound installations, often making use of spatialized audio. One facet of projected sound that has particularly interested him is the characteristic, frequency-dependent radiation pattern of acoustic sound sources. Mr. Eckel demonstrated his Stele and Viola Spezzata installations, which use recorded and processed viola sounds projected from a column of eight loudspeakers (see Figure 2). The sound is processed to produce lively, time-varying projection patterns that create a range of spatial effects without surrounding the listener with loudspeakers. Jean-Marc Jot (Emu Creative Technologies) gave an informal presentation of IRCAM's Spatialisateur software in conjunction with the demonstration by Dan Overholt (Massachusetts Institute of Technology Media Lab) of The Flying Violin, a work for amplified, spatialized violin. "Spat," as it is known, is integrated into the Max/MSP environment and provides a useful interface for projecting sounds into a multi-channel environment. Mr. Overholt constructed special pickups so that each string of the acoustic instrument could be treated independently. As a result, he was able to circle the tones from one string around the octophonic sound system in one direction while circling the sound from a neighboring string in the other. David Eagle (University of Calgary) introduced the aXiO, a novel instrument/controller that sends MIDI data to Max to control a wide range of parameters, including spatial ones. The aim is to give the performer expressive control, so that the sound can be moved in space or its timbre adjusted without relying on a sound engineer at the mixing console or computer.
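The counter-rotating effect described for The Flying Violin can be approximated with generic pairwise constant-power (sine/cosine law) panning around a ring of eight loudspeakers. The Python sketch below assumes evenly spaced loudspeakers, with speaker k at an optional offset plus k × 45° (an offset of 22.5° places a pair of loudspeakers in front, as in the octagonal layout described earlier). The function names are hypothetical, and this is not a description of how Spat or Mr. Overholt's setup actually computed its gains; it simply illustrates one common way to move two sources around the ring in opposite directions.

```python
import math


def ring_gains(azimuth_deg, num_speakers=8, offset_deg=0.0):
    """Pairwise constant-power panning gains for a ring of loudspeakers.

    Speaker k is assumed to sit at offset_deg + k * (360 / num_speakers)
    degrees, with angles increasing in one direction around the ring.
    Only the two speakers adjacent to the source direction receive signal.
    """
    spacing = 360.0 / num_speakers
    pos = ((azimuth_deg - offset_deg) % 360.0) / spacing  # fractional speaker index
    base = int(pos)
    frac = pos - base                   # 0.0 at speaker `base`, 1.0 at the next one
    first = base % num_speakers         # wrap (also guards against pos == num_speakers)
    second = (first + 1) % num_speakers
    gains = [0.0] * num_speakers
    # The sine/cosine law keeps first**2 + second**2 == 1, so loudness stays constant.
    gains[first] = math.cos(frac * math.pi / 2.0)
    gains[second] = math.sin(frac * math.pi / 2.0)
    return gains


def circular_path(start_deg, step_deg, steps):
    """Azimuths for a source circling the ring; a negative step reverses direction."""
    return [(start_deg + i * step_deg) % 360.0 for i in range(steps)]


if __name__ == "__main__":
    # Two sources (e.g. two strings) circling the ring in opposite directions.
    forward = circular_path(0.0, 45.0, 4)
    backward = circular_path(0.0, -45.0, 4)
    for a, b in zip(forward, backward):
        print(a, [round(g, 2) for g in ring_gains(a)],
              b, [round(g, 2) for g in ring_gains(b)])
```

Pairwise panning keeps each source confined to the two nearest loudspeakers, which tends to give sharp localization near a loudspeaker but a less stable image between widely spaced ones; finer paths simply use smaller steps.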

It is evident, on the basis of the work exhibited at the Sound in Space 2000 Symposium, that there is a great deal of activity in the domain of sound spatialization. As technology develops, and as our understanding of perceptual issues broadens, the treatment of sound as a spatial entity will only become richer. As one participant noted, for the average consumer, surround sound, as marketed in home theater systems, is already a given. The contributions of the researchers who gathered in Santa Barbara will undoubtedly help drive further development and refinement in this exciting domain.